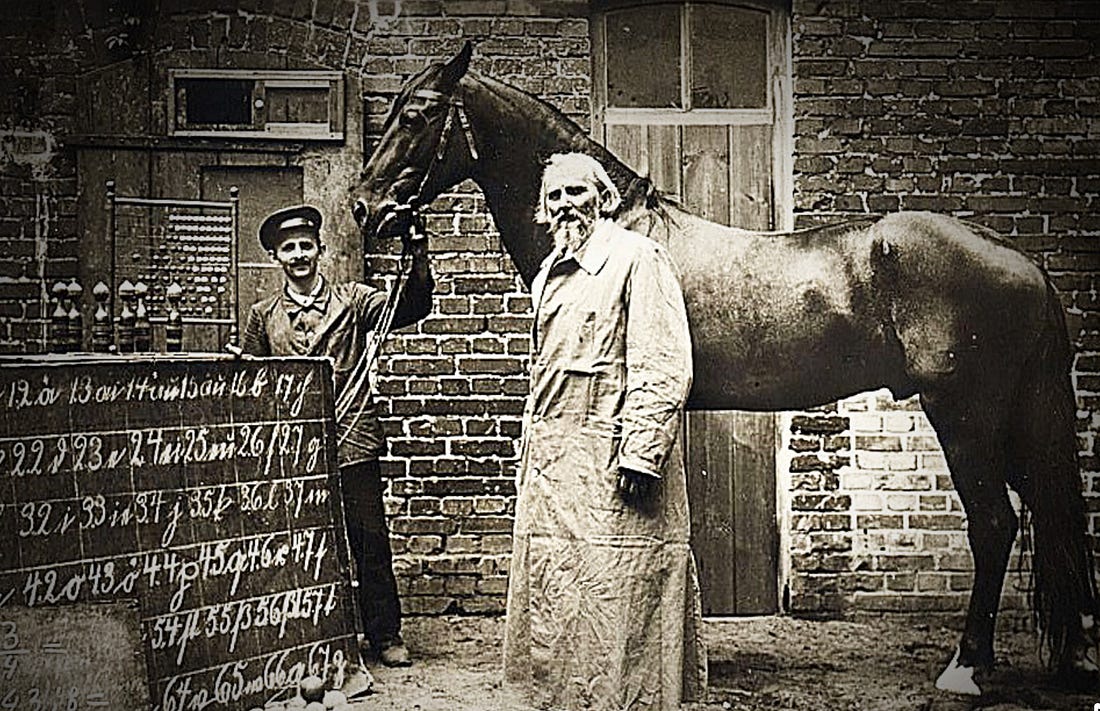

This is Brad DeLong's Grasping Reality—my attempt to make myself, and all of you out there in SubStackLand, smarter by writing where I have Value Above Replacement and shutting up where I do not…

Wetware-Hardware Centaurs, Not Digital Gods: Wednesday MAMLMs

Faster GPUs won’t conjure a world model from out of thin air; we’re scaling mimicry, not understanding. That is my guess as to why the MAMLM frontier is spiky, with breathtaking benchmarks, baffling failures, real consequences…

Models ace PhD quizzes, then misclick a checkout button and hallucinate a blazer and tie. If these systems are about to become “better than humans at ~everything,” why can’t they keep time on a roasting turkey?

Without being built from the studs up around a world model—durable representations of time, causality, and goals—frontier MAMLM systems are high‑speed supersummarizers of the human record and hypercompetent counters at scale, yet brittle in embodied context, interfaces, and long‑horizon tasks. Calling it a “jagged frontier” misidentifies this unevenness, except to the extent it leads to permanent acceptance of centaur workflows where humans supply judgment and guardrails. Or so I guess.

I really do not grok things like this. It seems obvious to me that “AI” as currently constituted—Modern Advanced Machine-Learning Models, MAMLMs, relying on scaling laws and bitter lessons—will not be “better than humans at ~everything” just with faster chips and properly-tuned GPUs and software stacks. Without world models, next‑token engines merely (merely!) draw on and summarize the real ASI, the Human Collective Mind Anthology Super‑Intelligence, and excel only where answers are clear or where counting at truly massive scale suffices: fast mimics, useful but narrow. That seems obvious to me. But not to Helen Toner.
And so Helen Toner stands across a vast gulf, among the serried ranks of AI-optimist singularitarians who believe that we are building our cognitive betters, and that we face the “peak horse” problem that the steam engine, the internal-combustion engine, and the electric motor brought to the equine. And yet she is not on Team Artificial Super-Intelligence by 2030, not at all:

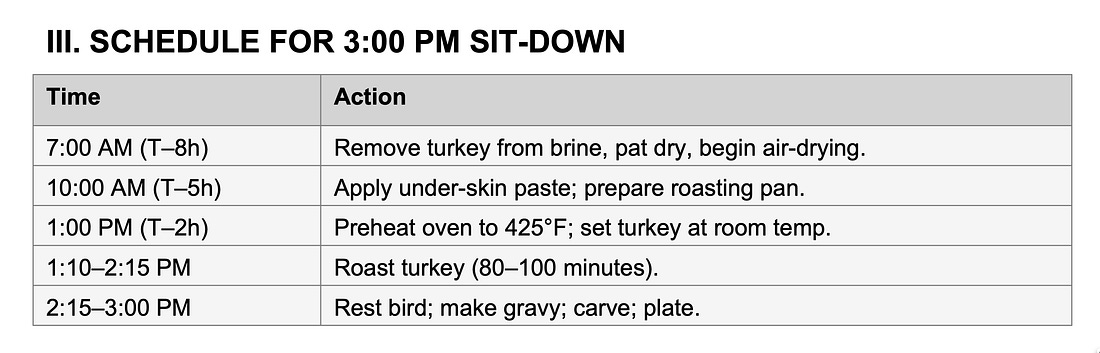

And yet I do not see this as confusing at all. What you have here is a function: prompts → next-word-continuations. (OK, next token.) This function answers the question: “What did the Typical Internet S***poster think was the next word in a stream-of-text situation like this one?” And as the prompt evolves recursively, which TIS it is copying from changes. And so all of a sudden we have not the same TIS who was manning the cash register, but a different TIS standing at the vending machine wearing a navy-blue blazer and a red tie, waiting for a date.

Thus there is thought behind the MAMLM’s choice of the next word to output. But the thought is that of the particular TIS who wrote the text-string in the training data that the LLM calculates was “like” this prompt. And the thought is not produced by a single mind across an entire output chunk, but rather assembled and sewn together like the monster of Victor Frankenstein.

Or take my most recent encounter with OpenAI’s frontier reasoning model:

Put the turkey (spatchcocked, convection oven) in at 1:10 PM. Roast it for 80-100 minutes. Take it out at 2:15 PM.

(Eighty minutes after 1:10 PM is 2:30 PM; 2:15 PM is only 65 minutes in.)

Whatever this is, it is not AGI. It is not “sparks of AGI”. It is Clever Hans—let me, via a roiling boil of 3000-dimensional linear algebra, output the word-sequences that I hope will please the human, without having any idea what the words mean, because I have zero world model, and so understand neither events nor time nor duration as concepts. Now it is a Clever Hans that can stamp its foot a billion times a second. It is a Clever Hans that can then be post-trained to remember detailed multi-thousand-dimensional RLHF maps from problems to solutions. But, still, Clever Hans. It needs a human rider. Or perhaps it needs to be the hindquarters of a centaur.

What is not confusing is that it fails. What is confusing, rather, to me, is that it succeeds so often without there being a scrap of a world model behind it.

Toner claims:

And yet she remains fabulously optimistic about “AI”:

Again: I really do not grok it. As I said at the start, I am standing across a vast gulf from Helen Toner. She thinks it is obvious that with just a few more generations of Moore’s Law compression of doped silicon pathways and optimization of GPU hardware and software, these shoggoths will be “better than humans at ~everything”. I think it obvious that, without tearing them down to the studs and rebuilding them to understand the world from the get-go, they will be limited to three things: serving as summarization front-ends to the real ASI, the Anthology Super-Intelligence of humanity’s collective mind binding space across the globe and time since the invention of writing in the year -3000; solving those problems that have definite and identified right answers; and solving those where we can see what the right answer is, but obtaining it requires counting things at immense scale.

But then how are they so damned effective? It is clear that they are drawing on information already known to the real ASI—the Human Collective Mind Anthology Super-Intelligence—and only on that information. Where else could they learn stuff from, after all? All they see is the words people have written. There are times when I wonder if their apparent relative excellence simply arises from the fact that search engines have been poisoned by SEO, and by the focus of the masters of Google on selling ads rather than on providing the most effective internet-search information utility. But I wish I knew. I really wish I had a clue here.

If reading this gets you Value Above Replacement, then become a free subscriber to this newsletter. And forward it! And if your VAR from this newsletter is in the three digits or more each year, please become a paid subscriber! I am trying to make you readers—and myself—smarter. Please tell me if I succeed, or how I fail…

#sweet-lesson

Wetware-Hardware Centaurs, Not Digital Gods: Wednesday MAMLMs

Wednesday, 26 November 2025
