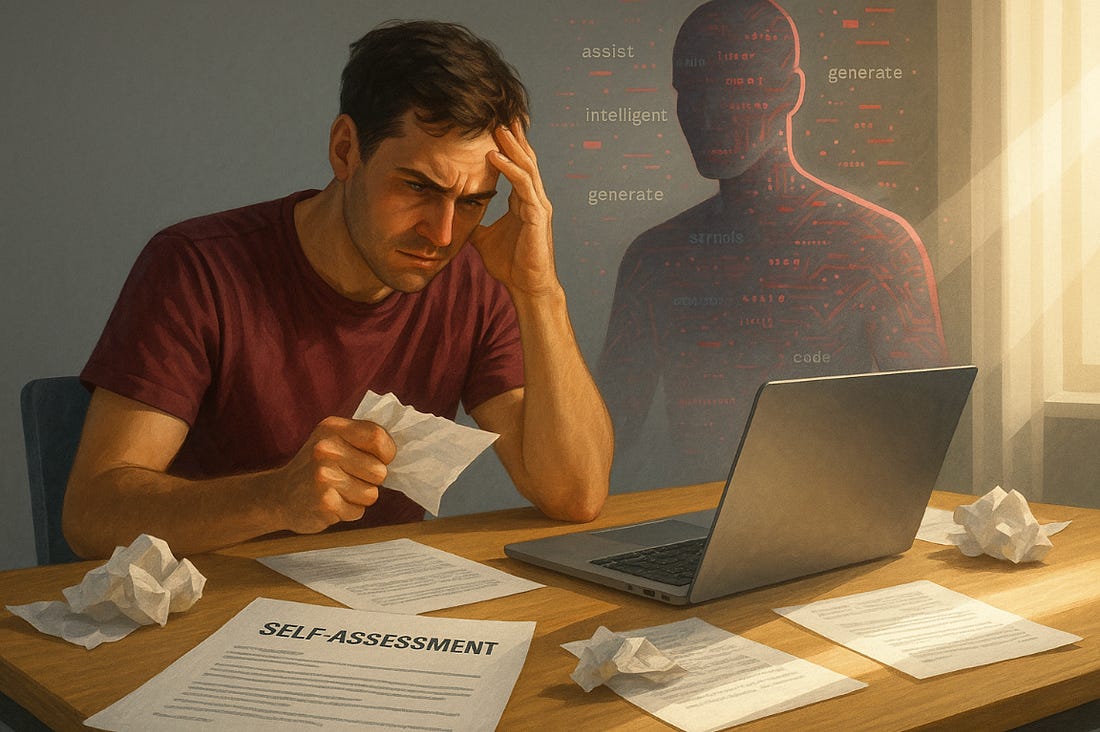

This is Brad DeLong's Grasping Reality—my attempt to make myself, and all of you out there in SubStackLand, smarter by writing where I have Value Above Replacement and shutting up where I do not…

Using GPT LLM MAMLMs as Your Rabbit—Your Pacer

GPT LLM MAMLMs are not oracles, subordinates, or colleagues. They are emulations of TISs—Typical Internet S***posters. If you are at all a good writer, the most they can be is “rabbits” that, in races and in training, mark and keep the pace that you want to meet and then better. Yes, with a lot of work you can nudge the TIS-emulators into desirable configurations. But that is a lot of work, done at the wrong and at a very unreliable abstraction layer. Better to say “I can do better than that drivel…” and write it yourself…

And looking at this yet again. Still so excellent on the way to use ChatGPT and its cousins

As a workflow: dump notes into AI, and then look at the slop in disgust. Then delete the mush and rewrite, perhaps paragraph by paragraph, perhaps all at once, using the friction to surface your authentic articulation. If you are at all a good writer, you are then highly likely to find that you have something. After all, ChatGPT or Claude will, at bottom, give you only AI slop. They can give you nothing other than what a TIS—a Typical Internet S***poster—would write. This is how these models work: they learn from the mass of online text and spit out some interpolation driven by their compressed internal model of typical word patterns. There are ideas buried in the word-patterns they produce, yes. But they are buried.

Now GPT LLM boosters, at this point, begin talking frantically about RAG, RLHF, prompt engineering, data curation, feedback loops, careful data chunking and indexing, embedding optimization, hybrid search and reranking, supervised fine-tuning on high-quality and human-curated examples, human reward modeling, proximal policy optimization, continuous feedback loops, custom base prompts, context injection, dynamic prompt templates, semantic chunking, metadata tagging, regular content updates, fine-tuning models on domain-specific data, and so on. Yes, these are ways to try to make the TIS that is the GPT LLM MAMLM behave better. As Andrej Karpathy once wrote:

These are patches, not solutions. Relying on software to mimic a TIS nudged into producing something less sloppy is a trick that almost never works. If you write at all well, AI will give you nothing better than a very rough draft.

And step back. “Context engineering” is a lot of work. Unless you have a large number of parallel documents or tasks for which you need to produce prose quickly and at scale, and for which you can nudge the TIS into a not-embarrassing configuration, you are doing the work, but at the wrong and at a very unreliable abstraction layer. Better to avoid that “context engineering” work, for it is massive, and focus on saying what you need to say to persuade your ultimate readers. And so the best course is really to “let the hate flow through you. Delete whatever drivel it wrote and write something better, because you can definitely improve on that…”

Of course, if your writing is currently worse than that of the TIS—or if you have to write in a language in which you are not fluent—AI can really help. ChatGPT and Claude and company can then produce text that is clearer and less embarrassing than what you would write unaided. For weak writers, AI does powerfully lift the floor. And I see this democratization of the power to write serviceable prose as a very good thing.

And if the words you write are not to persuade but to ticket-punch, to box-check, matters of boilerplate or ritual—things not to be seriously read but rather to fit into bureaucratic routine—the AI-slop generated from your notes may well be fine.

If reading this gets you Value Above Replacement, then become a free subscriber to this newsletter. And forward it! And if your VAR from this newsletter is in the three digits or more each year, please become a paid subscriber! I am trying to make you readers—and myself—smarter. Please tell me if I succeed, or how I fail…

Using GPT LLM MAMLMs as Your Rabbit—Your Pacer

Saturday, 22 November 2025
