The AI Productivity Paradox 🏃♂️

Exploring why AI often falls short of expectations, and what good teams are doing to bridge the gap.

Hey, Luca here! This is a weekly essay from Refactoring! To access all our articles, library, and community, subscribe to the full version.

Last week I finally caught up with the latest DORA Research about AI. It is a massive, 140-page doc exploring the state of AI-assisted development, and it gave me a lot to think about.

By now, there are a lot of reports out there that try to figure out how engineering teams are doing with respect to AI. Broadly speaking, there are three main areas that are important to measure: adoption, productivity, and impact.

These work as increasing levels of maturity, and increasingly better proxies for true value: adoption by itself is not very useful, productivity is somewhat better, and impact is what we truly care about.

The problem is: these topics are also in ascending order of how hard they are to measure. A lot of the available data is either fuzzy, or self-reported, or both, which makes it hard to trust.

So today I want to cover some of the ideas that keep surfacing in this kind of research, common pitfalls to avoid, and mental models I have seen the best teams use to trend in the right direction: from “adoption is high” to “impact is high”.

Here is the agenda:

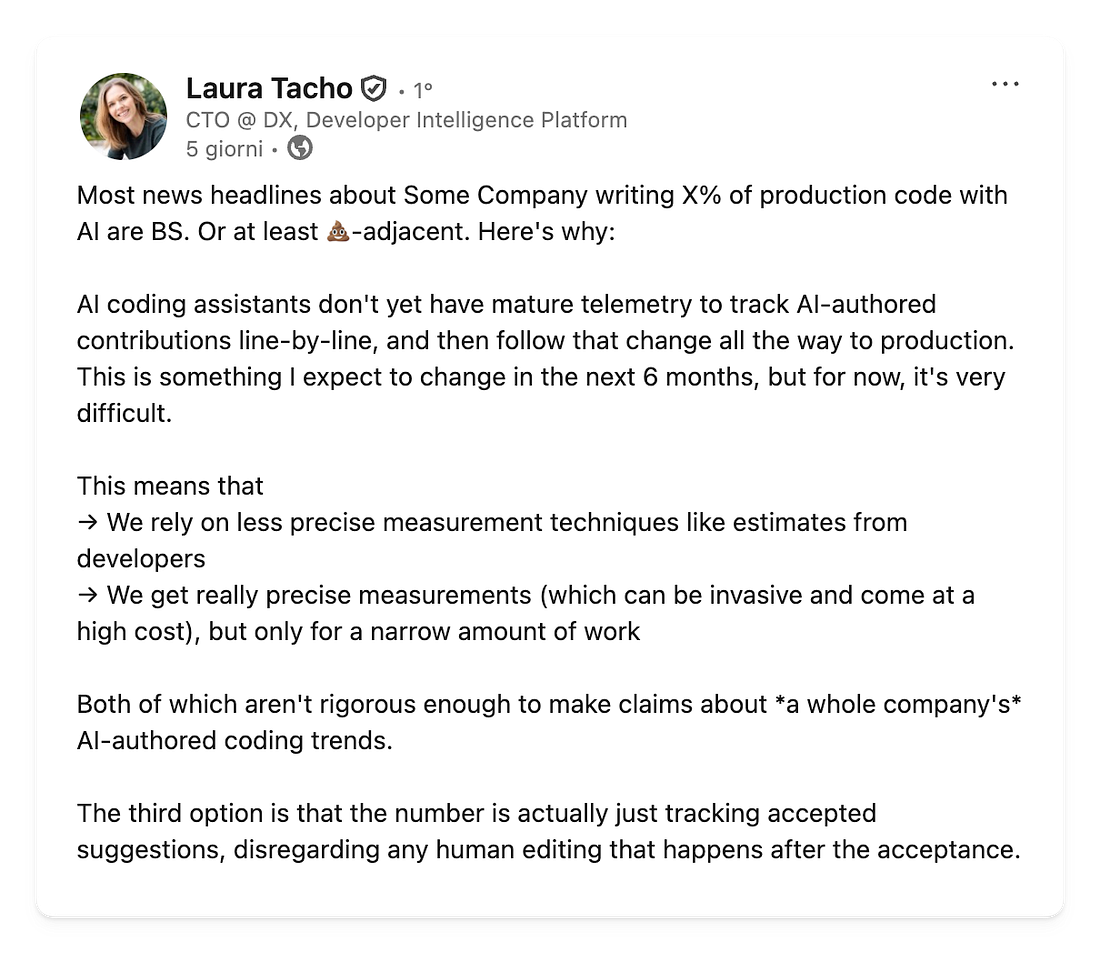

Let’s dive in!

I discussed a lot of this with Doug Peete, CPO at Atono, with whom I already wrote this guide on creating a product engineering culture over the summer. I am a fan both of Atono as a tool and of how openly the team shares everything about how they work.

As a Refactoring reader, you can get 75% off Atono for 24 months 👇

Disclaimer: even though Atono is a partner for this piece, I will only provide my transparent opinion on practices and tools we covered, Atono included.

💊 Productivity placebo

One of the first findings from the DORA report is that 90% of engineers regularly use AI for coding at work. This is unsurprising, but also doesn’t say much. As Laura Tacho noted a few days ago, with the current state of telemetry tooling, we can’t even reliably measure how much code is truly written by AI.

Which leads us to the second problem, closely related to the first: productivity data is largely self-reported. In fact, the only way we can investigate AI’s productivity impact at a scale that goes beyond small experiments is by asking developers how they feel about it. DORA did exactly that, and found that they are happy about it.

However, we also know that the only time we tried to measure productivity without trusting developers, by using proper control groups, we found that they thought they were more productive, while they were not.

Why does this even happen? Why do people’s instincts mislead them? I love this take from a recent Cerbos article 👇

In other words, it’s productivity placebo.

Has this ever happened to you? You get into a multi-hour bug-fixing rabbit hole, you try everything, and nothing works. You give up for the day, and the morning after you get back and fix it in 5 minutes.

This happens because relentless bug fixing is like a fake state of flow. You get into a workflow that has a quick feedback loop (you change a small thing and immediately see if it works) and is cognitively easy to sustain, so you can go on for hours. But it feels easy because you are not truly engaged. You are working on autopilot and so, unsurprisingly, not making a lot of progress.

There is a way to work with semi-autonomous AI agents that feels exactly like this. You feel productive, but you are not.

So how do you escape it? To me it’s first and foremost about good work hygiene:

If you only read the titles of these items, they would be good advice for any coding session: they just happen to be even more true when AI is in the loop.

So in this case too, as in many others, AI works as an amplifier:

Now, even if you escape these traps and get truly more productive thanks to AI, there is a more advanced trap you need to watch out for: individual productivity doesn’t necessarily translate to team productivity 👇

⛔ Bottlenecks...


Wednesday, 26 November 2025
