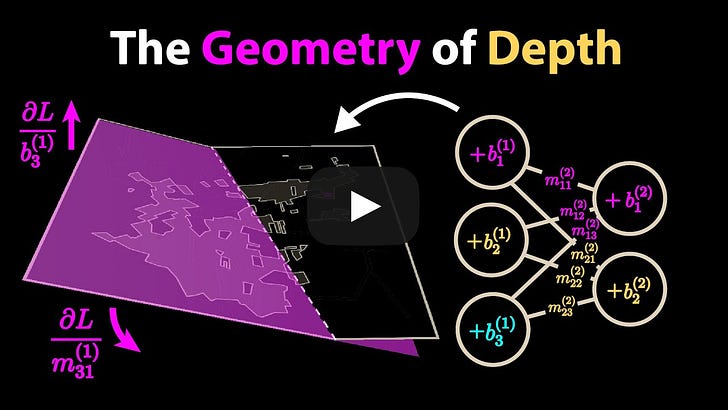

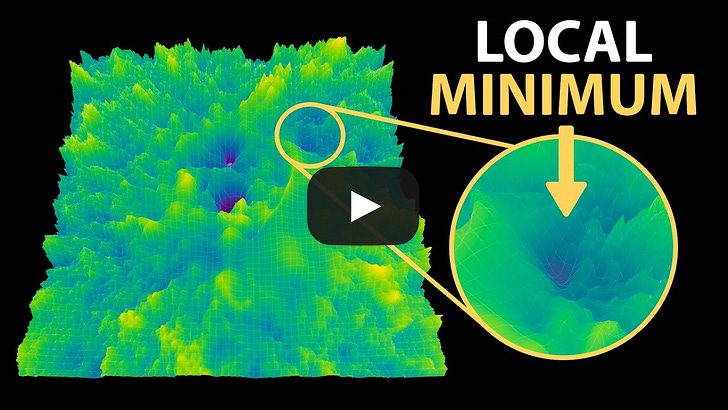

This is Brad DeLong's Grasping Reality—my attempt to make myself, and all of you out there in SubStackLand, smarter by writing where I have Value Above Replacement and shutting up where I do not… The Best Things I Have Found on the Unreasonable Effectiveness of Neural NetworksIt may simply be that I am in a very unusual position with respect to what I know & what I don't, but I found these three videos from Welch Labs to be incredibly enlightening with respect to the unreaIt may simply be that I am in a very unusual position with respect to what I know & what I don't, but I found these three videos from Welch Labs to be incredibly enlightening with respect to the unreasonable effectiveness of neural-network models…Folding space: How back-propagation and ReLUs can actually learn to fit pieces of the world. Intuition is actually possible! Geometrically, neural nets work not by magic, but by folding planes into shapes—again and again, at huge scale, in extraordinarily high numbers of dimensions. Addition of innumerable such shapes composes simple bends into complex functions, and back-propagation finds such functions that fit faster than it has any right to. But our geometrical intuition is limited. Our low‑dimensional brains misread the danger that a model might get “stuck” in a bad local minimum of the loss function, and not know which way to move to get to a better result. In large numbers of dimensions—when you have hundreds of thousands, or more of parameters to adjust what emerges looks to us low-dimensional visualizers as “wormholes.” As gradient descent proceeds, the proper shift in the slice you see reveals nearby, better valleys in the loss function down which the model can move. Your mileage may, and probably will, vary. But these visual intuitions click for me. And I can at least believe, even if not see, how things change when our vector spaces shift from three- to million-dimension ones, in which almost all vectors chosen at random are very close to being at right angles to each other. From Welch Labs: <https://www.youtube.com/@WelchLabsVideo/videos>. The first of these (which was the third made) three “How Models Learn” videos is the one I found most illuminating. As I understand its major points:

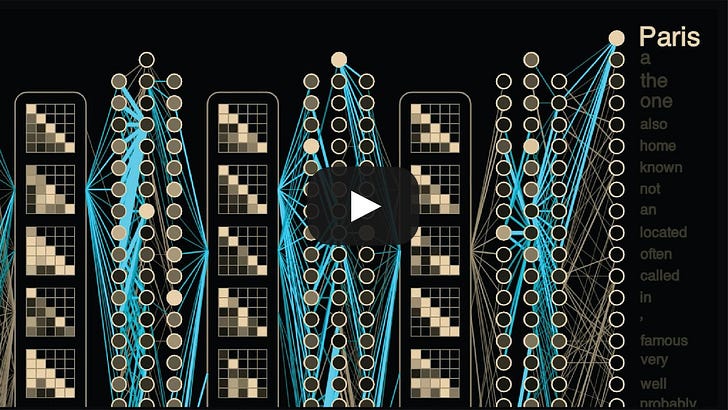

And here is the video:  <https://www.youtube.com/watch?v=qx7hirqgfuU&list=FLupRdJE0AjQUa3Ab-fo88NQ&index=13>  <https://www.youtube.com/watch?v=VkHfRKewkWw&t=1s> I learned less—but still a lot—that I could grasp and visualize from the second video, the one just above. Still, notably:

<https://www.youtube.com/watch?v=NrO20Jb-hy0> And still very much worth watching is the third video (the first made) above. Specifically:

Neural networks aren’t as mysterious when you try to translate them into geometry. Each ReLU nearal-network unit “folds” a plane; layers compose folds into intricate, piecewise‑linear shapes that carve real‑world boundaries. Yes, a single hidden layer can approximate anything—but trainability matters, and gradient descent finds good solutions vastly more reliably when depth compounds expressivity. Welch Labs’ visuals make this concrete: Belgium–Netherlands enclaves show how layered folds succeed. Start with a map: latitude, longitude, and a z‑axis of the degree of confidence the location is in Holland. Add neural-network ReLUs and watch each neuron fold space. And so simple bend, fold, shift, and add arithmetic neural-network unit by unit turns into true alchemy. And the unreasonable effectiveness of neural-network models at scale suddenly appears less unreasonable. If reading this gets you Value Above Replacement, then become a free subscriber to this newsletter. And forward it! And if your VAR from this newsletter is in the three digits or more each year, please become a paid subscriber! I am trying to make you readers—and myself—smarter. Please tell me if I succeed, or how I fail…#mamlms |

The Best Things I Have Found on the Unreasonable Effectiveness of Neural Networks

Monday, 15 September 2025

Subscribe to:

Post Comments (Atom)

No comments:

Post a Comment