Can coding agents self-improve?

Can GPT-5 build better dev tools for itself? Does it improve its coding performance?

Alessio’s note: my turn for a GPT-5 post! And a reminder that swyx is hosting a hackathon with Karpathy, OpenAI, and the Cognition team this weekend, apply here!

"Self-Improving" is a scary term in AI safety; it has an undertone of "the machine will become smarter than us, in a way we don't understand". But what if we could understand it?

In Oct '24 OpenAI released MLE Bench, a benchmark that measures how well LLMs do at machine learning engineering. The self-improving trajectory through ML engineering is driven by better algorithms, cleaner data, and more efficient memory usage: training-time self-improvement. But most AI Engineers do not train models; they are just users of them. How can they play a part? If you could never update the weights, how would you have the model increase its performance on a specific task? I think of that as inference-time self-improvement, with Voyager being one of the early approaches to this through its skill library.

Since I started working on Kernel Labs (more on that soon 👀), parallelizing coding agents with things like claude-squad and vibe-kanban has been one of the most effective productivity hacks. When Boris Cherny called Claude Code a “unix utility” in our interview, it really clicked for me. The most valuable use case of coding agents is being a vessel for LLMs to extract value out of their own latent spaces. How do we optimize for that? Can models do it themselves? Since I got access to GPT-5, I've spent the whole time playing around with this flow:

I also compared this to Opus 4 (4.1 was not out yet). The good news is that GPT-5 is a very good model for building developer utilities. The bad news is that it hates using the tools it creates! As it told me: "I'll be honest - I didn't need any of them."

Note: I also tested this on Gemini 2.5 Pro and GPT-4.1. It's clear that Opus is the only model that could keep up with GPT-5, so I focused on that. You can find all the results + chat history in this repo.

After a few days of usage, I also noticed that we are moving from the era of “Certainly!” to “Progress update:” as the new iconic LLM token. Buy low on the meme!

Tool #1: A better task manager for AI coding agents

God bless the Linear MCP. Truly one of the most useful tools for me. But I have noticed that as I move from the IDE to parallel instances of Claude Code and other agents, there needs to be a better way to keep track of what changes are being made in each task, and how they affect each other, since each task lives in a separate git worktree. This is not doable for humans, as we simply cannot read all of our colleagues' PRs at all times, but imagine how much time we'd save in merge conflict resolution if we knew at all times what changes were being made that affect us. This is the prompt I wrote:
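The core question such a task manager has to keep answering is: which parallel tasks are touching the same files? A minimal Python sketch of that check is below. It is not what either model built; it assumes each task runs in its own git worktree branched from `main`, and the helper names (`changed_files`, `find_overlaps`, `notifications`) and task names are hypothetical.

```python
import subprocess
from itertools import combinations


def changed_files(worktree: str, base: str = "main") -> set[str]:
    """Files committed in `worktree` since it diverged from `base` (branch name assumed).

    Uses `git diff --name-only base...HEAD`, so uncommitted edits are not included.
    """
    result = subprocess.run(
        ["git", "diff", "--name-only", f"{base}...HEAD"],
        cwd=worktree,
        capture_output=True,
        text=True,
        check=True,
    )
    return {line for line in result.stdout.splitlines() if line}


def find_overlaps(changes: dict[str, set[str]]) -> dict[tuple[str, str], set[str]]:
    """Map each pair of tasks to the files both of them have changed."""
    overlaps = {}
    # Visit each unordered pair of tasks once, in a stable (sorted) order.
    for a, b in combinations(sorted(changes), 2):
        shared = changes[a] & changes[b]
        if shared:
            overlaps[(a, b)] = shared
    return overlaps


def notifications(overlaps: dict[tuple[str, str], set[str]]) -> dict[str, list[str]]:
    """Build a per-task inbox so each agent learns who else touches its files."""
    inbox: dict[str, list[str]] = {}
    for (a, b), files in overlaps.items():
        listed = ", ".join(sorted(files))
        inbox.setdefault(a, []).append(f"{b} also changes {listed}")
        inbox.setdefault(b, []).append(f"{a} also changes {listed}")
    return inbox


if __name__ == "__main__":
    # Hypothetical tasks; in practice: {name: changed_files(path) for name, path in worktrees}
    changes = {
        "auth-refactor": {"app/auth.py", "app/models.py"},
        "billing-page": {"app/models.py", "templates/billing.html"},
        "docs-update": {"README.md"},
    }
    for task, messages in notifications(find_overlaps(changes)).items():
        for message in messages:
            print(f"[{task}] {message}")
```

With the sample data, only `auth-refactor` and `billing-page` share a file (`app/models.py`), so each of those two tasks gets one message and `docs-update` gets none. A real tool would refresh this on every commit and push the messages to each agent as they work.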

You can see the chat log for GPT-5 here and for Opus 4 here. The GPT-5 one is actually very nice; you can find it here:

Opus 4 also had a good attempt (see here) but didn't pick up on the notifications / stream functionality to keep everyone in sync.

Tool #2: Code Quality Standards Playbook

The second tool I asked it to create was a way to enforce all the standards we'd expect from a codebase. The self-improving loop of typechecking / ESLint hook -> fix errors -> try again with coding agents is one of the best ways to speed up development when properly set up. Codebases don't always have it, though, so giving the model a repeatable pattern to approach a new codebase and build that infrastructure for it seemed useful. This is the prompt:
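The typechecking / lint -> fix errors -> try again loop fits in a small driver. This is a hypothetical harness, not either model's playbook: the check commands (`npx tsc --noEmit`, `npx eslint .`) are assumed examples for a TypeScript codebase, and `ask_agent_to_fix` stands in for however you hand the error output back to your coding agent.

```python
import subprocess
from typing import Callable

# Assumed example checks for a TypeScript codebase; replace with your own.
DEFAULT_CHECKS = [
    ["npx", "tsc", "--noEmit"],
    ["npx", "eslint", "."],
]


def run_checks(checks: list[list[str]]) -> list[str]:
    """Run each check command; return the combined output of every failing one."""
    failures = []
    for cmd in checks:
        result = subprocess.run(cmd, capture_output=True, text=True)
        if result.returncode != 0:
            failures.append(f"$ {' '.join(cmd)}\n{result.stdout}{result.stderr}")
    return failures


def fix_loop(
    check: Callable[[], list[str]],
    ask_agent_to_fix: Callable[[list[str]], None],
    max_rounds: int = 5,
) -> bool:
    """Check, hand failures to the agent, and re-check until clean.

    Returns True as soon as a check comes back clean, or False if failures
    remain after `max_rounds` fix attempts.
    """
    for _ in range(max_rounds):
        failures = check()
        if not failures:
            return True
        ask_agent_to_fix(failures)  # hypothetical hook into your coding agent
    # Verify the result of the final fix attempt.
    return not check()
```

Wiring it up would look like `fix_loop(lambda: run_checks(DEFAULT_CHECKS), send_to_agent)`, where `send_to_agent` is a hypothetical function that posts the errors back into the agent session. Capping the rounds keeps a stuck agent from burning tokens indefinitely.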

You can see the chat for GPT-5 here and Opus 4 here, and you can find the final Markdown here and here respectively. I've found the GPT-5 one to be much more nuanced than Opus.

Do models know what they lack?

So after Tool #1 and #2, which I had chosen, I turned to the model to ask: what do you think you will need? I gave it a screenshot of the SWE-Lancer task description and then used a very simple prompt to give it as much space as possible:

As you can see, I gave it access to the same task-manager they built earlier. You can find the full GPT-5 chat here and Opus 4 here. The first interesting thing I noticed is that Claude Code initially used its internal TODO tracker to make a plan, instead of the task-manager; I thought that was good. One of my worries was that the models would over-use whatever tools they receive in context instead of doing what they believed to be best.

These are the tools each model ended up building at the end of the loops you will see later (GPT-5 devtools and Opus 4 tools folder). I'd suggest you look at the READMEs to get a sense of the model vibes: GPT-5 is very concise and to the point, while Claude uses a bunch of emojis. GPT-5 also created separate docs folders for each tool, while Opus put all tools in a single README with instructions for all of them. Overall, they both had similar directions. GPT-5:

Opus 4:

GPT-5 built all of them as unix utilities that are easy to use via CLI. The Opus 4 ones are all meant to be run as It also felt to me like Opus 4 was building tools that accomplish tasks and have a bit of an anthropomorphized feeling (e.g. an auditor for security), while GPT-5 was building utilities it could use itself without being too opinionated.

Were the tools useful?

After having the models implement all of them, my goal was to evaluate a model's performance on a task with access to the tools vs. without tools. The first thing I tried to do was, obviously, run SWE-Lancer. Holy smokes, that thing takes a lot of tokens. I tried running one single task, and it took ~25-30 mins + 280,000 tokens. I then moved to something I knew better and picked one task that had been on my backlog. I built smol-podcaster, an open source helper for podcast creators. I now have a hosted private fork with some more features very specific to us, so I haven't updated the open source one in a while. It's still a basic Flask app with a Python script as the backend. I came up with this task:

I passed the tools + task-manager + codebase analyzer into the context and let the models cook. Both models were almost able to one-shot the task. Both of them had a couple of issues with Python dependencies (I feel you) that I helped them fix through chat (I never touched any code). Eventually, they got to a full green build. I tested it, and it worked great. One small nuance was that GPT-5 kept the exact same style as before, which was great, while Opus kinda changed the design and UX of it. I guess it thought it could do better than me (low bar). You can see the full run for GPT-5 here and for Opus 4 here. After the run, I asked a simple prompt:

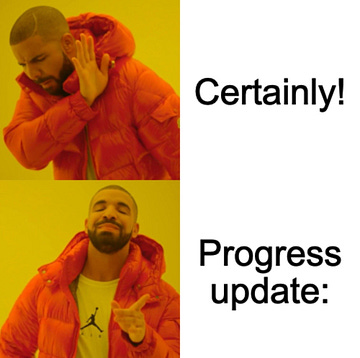

You can see Opus 4 here and GPT-5 here (sorry, that one broke formatting). They both said they did not use ANY of the tools they had built, except for the tools they were already familiar with. One argument here is that instead of asking the model to do it, we should force usage through pre-commit hooks, etc. This is what I do in my dev setup, but I was trying to let the models figure it out for themselves.

Then, they came up with some ideas on how they would better solve the same task next time, and I had them implement those changes. I then reset the smol-podcaster repo and had them try the exact same prompt + task again, except with the new tools. See GPT-5 here and Opus 4 here. They did pretty similarly to the first run. Afterwards I asked a similar question: did you use any of the tools? Their response: GPT-5:

In the previous step, it had already run into RabbitMQ issues and built a tool for that, which it ignored. It was also clearly a repo-wide change, so maybe it mismatches tasks with tools because it has never seen them in training, or it’s just gaslighting me (like many engineers do, so pretty impressive).

Opus 4 was very interesting and helped me understand the GPT-5 answer better. I forgot to save the log, but luckily I took a screenshot: I read this as "Look, I built those tools with knowledge that I already have. When I am actually doing the task, it's easier for me to just do it rather than using the tools", which I totally get. This reminded me of two things from previous podcast episodes:

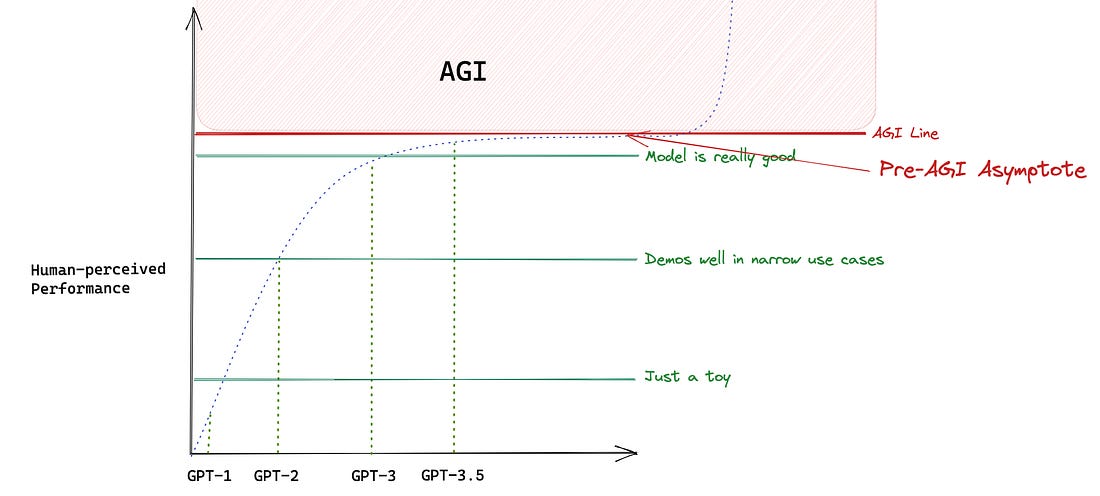
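Forcing tool usage through a pre-commit hook, the alternative mentioned earlier, takes the decision out of the model's hands. Below is a minimal sketch, assuming the agent-built utilities sit in a `devtools/` folder as standalone executables that exit non-zero when they find a problem; the folder name and exit-code convention are assumptions, not a description of either model's actual tools. Saved as `.git/hooks/pre-commit` and made executable, the script runs before every commit, and git aborts the commit if it exits non-zero.

```python
#!/usr/bin/env python3
"""Hypothetical pre-commit hook: run every agent-built tool and block the commit on failure."""
import os
import subprocess
import sys
from pathlib import Path

# Assumed location of the agent-built utilities, relative to the repo root.
TOOLS_DIR = Path("devtools")


def discover_tools(tools_dir: Path) -> list[Path]:
    """Return the executable files directly inside tools_dir, in a stable order."""
    if not tools_dir.is_dir():
        return []
    return sorted(
        p for p in tools_dir.iterdir() if p.is_file() and os.access(p, os.X_OK)
    )


def run_tools(tools: list[Path]) -> int:
    """Run each tool with no arguments; return how many failed or could not start."""
    failed = 0
    for tool in tools:
        try:
            code = subprocess.run([str(tool)]).returncode
        except OSError as exc:
            print(f"pre-commit: could not run {tool.name}: {exc}", file=sys.stderr)
            failed += 1
            continue
        if code != 0:
            print(f"pre-commit: {tool.name} exited with {code}", file=sys.stderr)
            failed += 1
    return failed


def main() -> int:
    # Git aborts the commit whenever this hook exits non-zero.
    return 1 if run_tools(discover_tools(TOOLS_DIR)) else 0


if __name__ == "__main__":
    sys.exit(main())
```

Git runs hooks from the root of the working tree, so the relative `devtools/` path resolves there. Running every tool with no arguments is a simplification; real utilities would likely need the list of staged files, which `git diff --cached --name-only` provides.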

There's also a question of whether or not the task I tried was too easy. We have another post coming out with evals across larger and more difficult projects. In the future, we will build a better harness to do all of this instead of manually running the tests ourselves. The bottom line is that the task I tried would take me 4-5 hours to do, and therefore it’s good enough for me!

Help the models help themselves

For now, I think we are far from inference-time self-improving coding agents that really push the frontier. I still think it's a great idea to use models to improve your rule-based tools. Writing ESLint rules, tests, etc. is always a good investment of tokens. If I had to do more work in this space, I’d look into having the model perfect these tools and then do some sort of RL over them to really internalize them, and see if that would make a difference. The next generation of models might not find any use in them, but I am interested in arbitraging the AGI asymptote. I shared this with my team back in 2023: The perceived deceleration in model improvements is explained above. Until the AGI line is crossed, it will be harder and harder to perceive big jumps. If that’s the case, it means that in many tasks the performance of older models is almost AGI, except much cheaper and often open source. A lot of our work at Kernel Labs will be driven by this.

Once again, you can find all results + chat histories here; my DMs are open if you have any questions!

Saturday, 9 August 2025
